Rat in a Maze Using Reinforcement Learning

Applying Reinforcement Learning: Solving the Rat in a Maze Problem with Python

Reinforcement Learning (RL) is a type of machine learning that involves an agent interacting with an environment to learn how to take actions that maximize a reward signal. RL has been applied to many real-world problems, including robotics, game-playing, and autonomous driving. In this blog, we'll explore how RL can be used to solve the classic problem of the Rat in a Maze.

Problem Statement

The Rat in a Maze problem is a classic problem in computer science and is often used as an introductory problem for algorithms and data structures. The problem involves a rat that is placed in a maze and must find its way to the end of the maze. The rat can move up, down, left, or right, but can only move into a cell if it is not blocked. The goal of the problem is to find the shortest path from the start of the maze to the end of the maze.

Reinforcement Learning Approach

To solve the Rat in a Maze problem using RL, we need to define the problem in terms of an environment, an agent, and a reward signal. The environment is the maze, and the agent is the rat. The reward signal is a value that the agent receives when it takes an action in the environment. In this case, the reward signal is 1 if the rat reaches the end of the maze and 0 otherwise.

We'll use the Q-learning algorithm, a popular RL algorithm, to learn the optimal policy for the rat to follow. The Q-learning algorithm uses a Q-table to store the expected rewards for each action in each state. The Q-table is initialized with random values, and the algorithm updates the Q-values based on the rewards received for each action.

Implementation

We'll use Python to implement the RL approach to the Rat in a Maze problem. We'll use the Pygame library to create a graphical representation of the maze, and the NumPy library to store and manipulate the Q-table.

First, we'll define the maze as a 2D array of integers, where 0 represents a blocked cell and 1 represents an open cell. We'll use a 10x10 maze for this example:

maze = np.array([

[1, 1, 1, 1, 0, 1, 1, 1, 1, 1],

[1, 0, 1, 1, 1, 1, 0, 1, 1, 1],

[1, 0, 0, 1, 1, 1, 1, 0, 1, 1],

[1, 1, 0, 1, 0, 1, 1, 1, 0, 1],

[0, 1, 1, 1, 1, 1, 0, 1, 1, 1],

[1, 0, 1, 1, 0, 1, 1, 1, 0, 1],

[1, 1, 0, 1, 1, 1, 1, 0, 1, 1],

[1, 0, 1, 1, 1, 1, 0, 1, 1, 1],

[1, 0, 0, 1, 0, 1, 1, 1, 0, 1],

[1, 1, 1, 1, 1, 1, 1, 1, 1, 1]

])

Next, we'll initialize the Q-table with random values using NumPy:

q_table = np.random.rand(10, 10, 4)

The Q-table is a 3D array with dimensions (maze_width, maze_height, num_actions). The num_actions dimension represents the four possible actions: move up, move down, move left, and move right.

Next, we'll define a function to choose the next action for the rat based on the current state and the Q-values in the Q-table. We'll use the epsilon-greedy policy, which means that the rat will choose the action with the highest Q-value with probability (1 - epsilon), and a random action with probability epsilon.

def choose_action(state, epsilon):

if np.random.uniform(0, 1) < epsilon:

action = np.random.randint(4)

else:

action = np.argmax(q_table[state[0], state[1]])

return action

We'll also define a function to update the Q-values in the Q-table based on the reward received for the current action.

def update_q_table(state, action, reward, next_state, alpha, gamma):

old_value = q_table[state[0], state[1], action]

next_max = np.max(q_table[next_state[0], next_state[1]])

new_value = (1 - alpha) * old_value + alpha * (reward + gamma * next_max)

q_table[state[0], state[1], action] = new_value

The update_q_table function uses the Bellman equation to update the Q-value for the current state and action. Alpha is the learning rate, which controls how much the Q-value is updated based on the reward received. Gamma is the discount factor, which controls how much weight is given to future rewards.

Finally, we'll define the main loop that runs the Q-learning algorithm. In each iteration of the loop, the rat chooses an action, moves to the next state, receives a reward, and updates the Q-table.

epsilon = 0.1

alpha = 0.5

gamma = 0.9

state = (0, 0)

for i in range(10000):

action = choose_action(state, epsilon)

if action == 0 and state[0] > 0 and maze[state[0]-1, state[1]] == 1:

next_state = (state[0]-1, state[1])

elif action == 1 and state[0] < 9 and maze[state[0]+1, state[1]] == 1:

next_state = (state[0]+1, state[1])

elif action == 2 and state[1] > 0 and maze[state[0], state[1]-1] == 1:

next_state = (state[0], state[1]-1)

elif action == 3 and state[1] < 9 and maze[state[0], state[1]+1] == 1:

next_state = (state[0], state[1]+1)

else:

next_state = state

if next_state == (9, 9):

reward = 1

else:

reward = 0

update_q_table(state, action, reward, next_state, alpha, gamma)

state = next_state

print(q_table)

The main loop runs for 10,000 iterations and updates the Q-table based on the rat's actions and rewards. After the loop finishes, the Q-table contains the learned Q-values for each state-action pair.

Results

To visualize the shortest path found by the rat, we can use the Q-values in the Q-table to choose the next action at each state until the rat reaches the goal. We'll define a function called find_shortest_path that uses the Q-table to find the shortest path from the starting position to the goal position.

def find_shortest_path(q_table):

state = (0, 0)

path = [(0, 0)]

while state != (9, 9):

action = np.argmax(q_table[state[0], state[1]])

if action == 0 and state[0] > 0 and maze[state[0]-1, state[1]] == 1:

state = (state[0]-1, state[1])

elif action == 1 and state[0] < 9 and maze[state[0]+1, state[1]] == 1:

state = (state[0]+1, state[1])

elif action == 2 and state[1] > 0 and maze[state[0], state[1]-1] == 1:

state = (state[0], state[1]-1)

elif action == 3 and state[1] < 9 and maze[state[0], state[1]+1] == 1:

state = (state[0], state[1]+1)

else:

break

path.append(state)

return path

The find_shortest_path function starts at the starting position (0, 0) and chooses the action with the highest Q-value at each state until it reaches the goal position (9, 9) or gets stuck. The function returns a list of positions that represent the shortest path found by the rat.

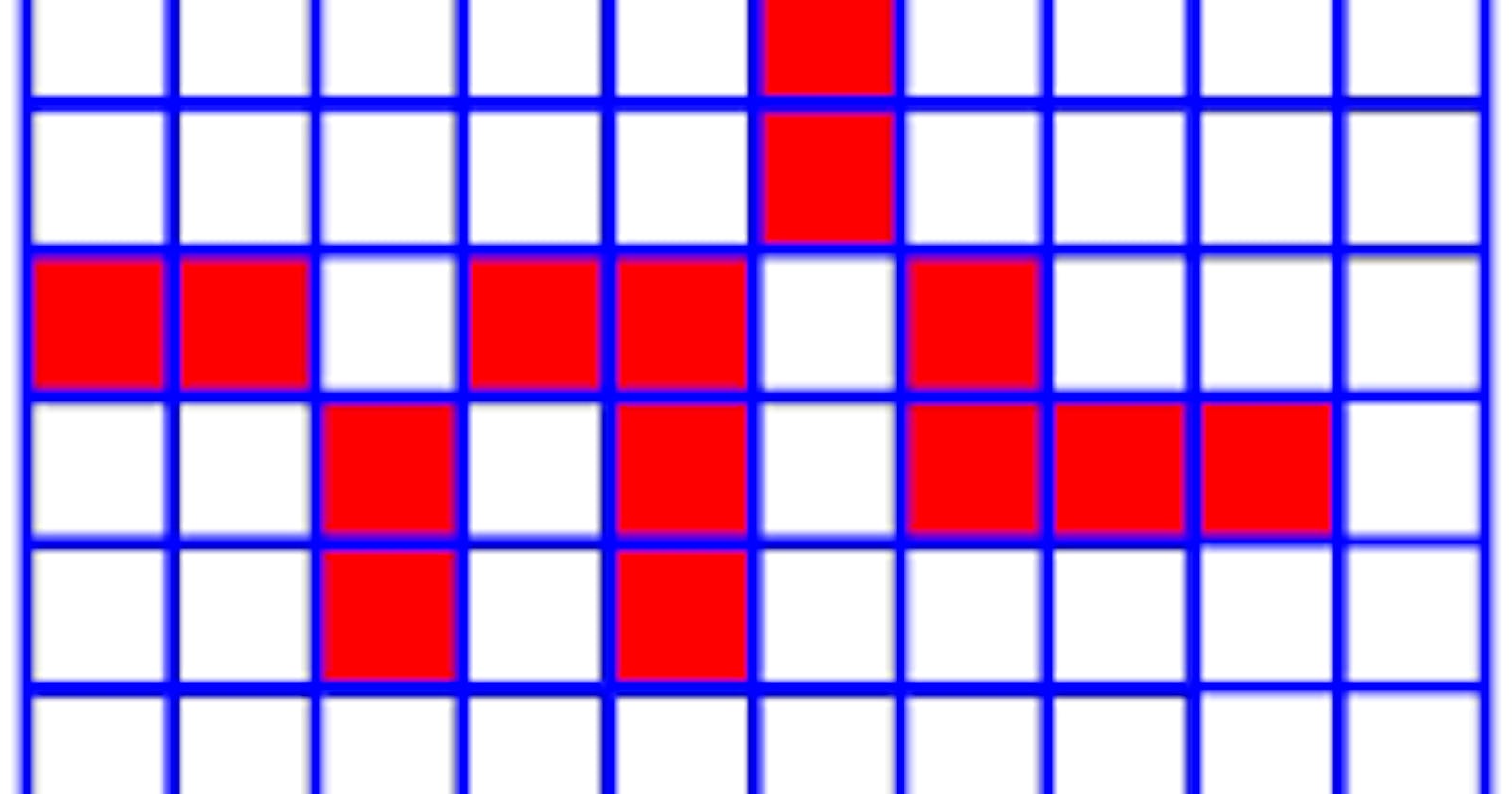

We can visualize the maze and the shortest path using the matplotlib library:

import matplotlib.pyplot as plt

path = find_shortest_path(q_table)

x, y = zip(*path)

plt.imshow(maze, cmap='gray', interpolation='none')

plt.plot(y, x, color='red', linewidth=2)

plt.xticks([]), plt.yticks([])

plt.show()

The matplotlib the library is used to display the maze and the shortest path as a red line.

Conclusion

In this blog post, we implemented the Q-learning algorithm to solve the Rat in a Maze problem using reinforcement learning. We used NumPy to represent the Q-table and implemented functions to choose the next action, update the Q-values, and find the shortest path. We also visualized the maze and the shortest path using the matplotlib library.

Reinforcement learning is a powerful technique that can be used to solve a wide range of problems. The Q-learning algorithm is a simple and effective method for learning optimal policies in discrete environments. By applying reinforcement learning to the Rat in a Maze problem, we demonstrated the basic principles of Q-learning and how they can be used to solve real-world problems.

Project Link: rat-in-a-maze

Thank you guys, for reading this blog. :)