Time series data is a crucial component of many modern machine learning applications and has become increasingly important in many areas of research and business. A time series is a sequence of data points recorded over time, typically at regular intervals, and it can be used to forecast future values or to identify patterns in historical data. In this blog, we will explore what time series data is, how it is used in machine learning, and some common algorithms used in time series analysis.

What is Time Series Data?

Time series data is a collection of observations made sequentially over time. It can be used to model trends, patterns, and changes in a system over time. For example, stock prices, weather patterns, and sales data can all be represented as time series data. Time series data can be univariate, meaning it consists of a single variable, or multivariate, meaning it consists of multiple variables that are observed over time.
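As a minimal sketch in plain Python (the values are made up purely for illustration), a univariate series can be stored as one value per timestamp, while a multivariate series stores several variables observed at the same timestamps:

```python
from datetime import datetime, timedelta

# Regularly spaced timestamps: one observation per day (illustrative values only).
start = datetime(2023, 1, 1)
timestamps = [start + timedelta(days=i) for i in range(4)]

# Univariate: one variable (e.g. daily sales) per timestamp.
univariate = list(zip(timestamps, [120.0, 135.0, 128.0, 142.0]))

# Multivariate: several variables (e.g. temperature and humidity) per timestamp.
multivariate = [
    (ts, {"temperature": temp, "humidity": hum})
    for ts, temp, hum in zip(timestamps, [3.1, 4.0, 2.5, 5.2], [0.81, 0.77, 0.85, 0.70])
]

for ts, value in univariate:
    print(ts.date(), value)
```

In practice, libraries such as pandas offer dedicated structures for this, but the underlying idea is the same: values indexed by time, in order.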

How is Time Series Used in Machine Learning?

Time series analysis is most often used to make predictions about future events based on historical data, but it also supports tasks such as anomaly detection and classification. It is applied in a variety of fields, including finance, marketing, and engineering. Machine learning algorithms can analyze time series data to identify patterns, relationships, and trends. Some common applications of time series analysis in machine learning include:

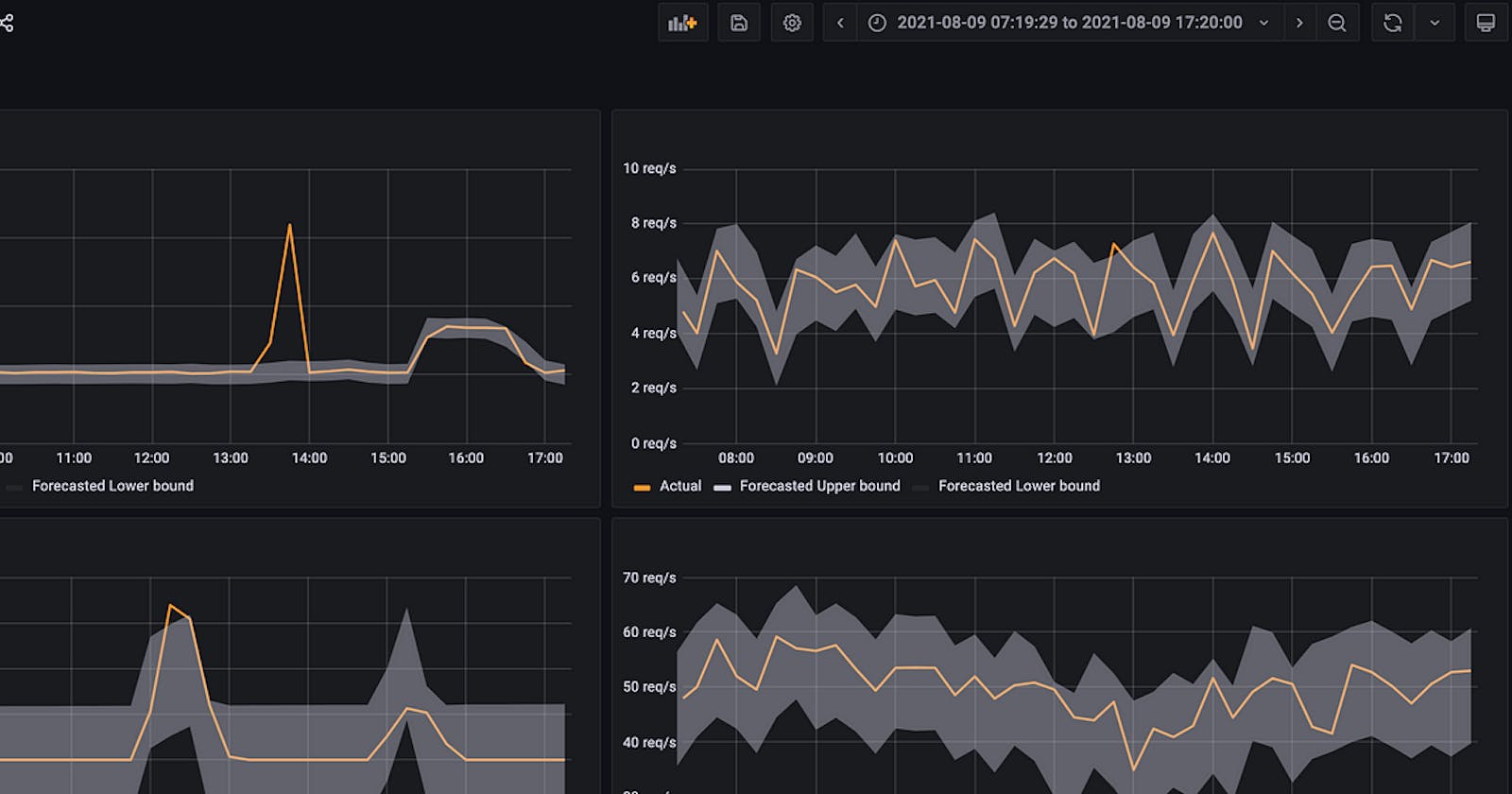

Forecasting future values based on past trends.

Detecting anomalies or outliers in the data.

Identifying seasonal patterns in the data.

Classifying time series data based on its characteristics.
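A common way to apply standard machine learning models to forecasting is to reframe the series as a supervised learning problem: a window of past values (lags) becomes the feature vector, and the value that follows becomes the target. The sketch below assumes a univariate series stored as a Python list; the function name `make_windows` is hypothetical:

```python
def make_windows(series, n_lags, horizon=1):
    """Turn a univariate series into (X, y) pairs for supervised learning.

    Each row of X holds n_lags consecutive past values; the matching y is the
    value `horizon` steps after the last value in that window.
    """
    if n_lags < 1 or horizon < 1:
        raise ValueError("n_lags and horizon must be >= 1")
    X, y = [], []
    for end in range(n_lags, len(series) - horizon + 1):
        X.append(series[end - n_lags:end])  # the most recent n_lags observations
        y.append(series[end + horizon - 1])  # the value to predict
    return X, y


X, y = make_windows([10, 11, 12, 13, 14, 15], n_lags=3)
# X == [[10, 11, 12], [11, 12, 13], [12, 13, 14]], y == [13, 14, 15]
```

Any regression model can then be trained on `X` and `y`. Note that the windows must be split chronologically (train on earlier windows, test on later ones) so the model never sees the future.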

Common Algorithms Used in Time Series Analysis

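Autoregressive Integrated Moving Average (ARIMA): This model describes a time series in terms of its own past values (the autoregressive part, AR), past forecast errors (the moving average part, MA), and differencing applied to remove trends and make the series stationary (the integrated part, I). It is commonly used for forecasting future values of a time series.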
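To show how the "I" and "AR" parts fit together, here is a hand-rolled sketch of the simplest case, ARIMA(1,1,0) with a constant (drift) term: difference the series once, fit an AR(1) model to the differences by ordinary least squares, forecast the differences recursively, and then sum them back onto the last observed value. This is an illustration under those simplifying assumptions, not a full ARIMA: it omits the MA part and uses least squares instead of maximum likelihood. In practice, a library class such as statsmodels' `ARIMA` estimates the complete model. The function names below are hypothetical.

```python
def fit_arima_110(series):
    """Estimate a minimal ARIMA(1,1,0) with drift: AR(1) on first differences.

    Model: d_t = c + phi * d_{t-1} + e_t, where d_t = y_t - y_{t-1}.
    Uses ordinary least squares rather than maximum likelihood.
    """
    if len(series) < 3:
        raise ValueError("need at least 3 observations")
    # "I" step: difference once to remove a linear trend.
    diffs = [b - a for a, b in zip(series, series[1:])]
    # "AR" step: regress each difference on the previous one.
    x, y = diffs[:-1], diffs[1:]
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    phi = sxy / sxx if sxx else 0.0  # constant differences carry no AR signal
    c = mean_y - phi * mean_x
    return c, phi


def forecast_arima_110(series, c, phi, steps):
    """Forecast the differences recursively, then undo the differencing."""
    last_value = series[-1]
    last_diff = series[-1] - series[-2]
    forecasts = []
    for _ in range(steps):
        last_diff = c + phi * last_diff  # predicted next difference
        last_value += last_diff          # integrate back to the original scale
        forecasts.append(last_value)
    return forecasts


series = [10, 10, 11, 12.5, 14.25, 16.125]
c, phi = fit_arima_110(series)
print(round(c, 6), round(phi, 6))                 # → 1.0 0.5
print(forecast_arima_110(series, c, phi, steps=3))  # → [18.0625, 20.03125, 22.015625]
```

The example series was constructed so that its differences follow d_t = 1 + 0.5 * d_{t-1} exactly, which is why the fitted coefficients recover those values.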

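Seasonal Autoregressive Integrated Moving Average (SARIMA): This model extends ARIMA with additional seasonal autoregressive, differencing, and moving average terms, so it can capture patterns that repeat at a fixed period, such as every 12 months in monthly data.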
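SARIMA is often written SARIMA(p, d, q)(P, D, Q)_s, where s is the season length. One of its building blocks, seasonal differencing, subtracts the value from one season earlier (y_t - y_{t-s}), which removes a pattern that repeats every s steps. A minimal sketch of just that step, using an illustrative series made of a linear trend plus a repeating 4-step pattern:

```python
def seasonal_difference(series, period):
    """Return y_t - y_{t-period}: the seasonal differencing step used in SARIMA."""
    if period < 1 or period >= len(series):
        raise ValueError("period must be between 1 and len(series) - 1")
    return [series[t] - series[t - period] for t in range(period, len(series))]


# Illustrative data: a repeating 4-step pattern on top of a linear trend.
pattern = [5, -2, 3, -6]
series = [t + pattern[t % 4] for t in range(12)]
print(seasonal_difference(series, period=4))  # → [4, 4, 4, 4, 4, 4, 4, 4]
```

After seasonal differencing, the repeating pattern is gone and only the trend's growth over one season (here, 4) remains; the seasonal AR and MA terms then model whatever structure is left.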

Long Short-Term Memory (LSTM): This is a type of recurrent neural network designed to learn long-term dependencies in sequential data, which makes it well suited to time series.
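What lets an LSTM retain information over many steps is its cell state, which is updated additively under the control of learned gates. The NumPy sketch below runs the forward pass of a single LSTM layer over a short sequence, using standard LSTM gate equations. The weights are random and untrained, and the gate ordering in the stacked weight matrices is an assumption of this sketch; in practice you would use a framework layer such as PyTorch's `torch.nn.LSTM` or Keras' `LSTM` and learn the weights by backpropagation.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def lstm_forward(inputs, W, U, b, hidden_size):
    """Run one LSTM layer over a sequence.

    inputs: (T, input_size); W: (4*H, input_size); U: (4*H, H); b: (4*H,)
    Gate order in the stacked weights (an assumption here): input, forget,
    cell candidate, output.
    """
    H = hidden_size
    h = np.zeros(H)  # hidden state
    c = np.zeros(H)  # cell state (long-term memory)
    hs = []
    for x_t in inputs:
        z = W @ x_t + U @ h + b
        i = sigmoid(z[:H])          # input gate: how much new information to write
        f = sigmoid(z[H:2 * H])     # forget gate: how much old memory to keep
        g = np.tanh(z[2 * H:3 * H])  # candidate values to write
        o = sigmoid(z[3 * H:])      # output gate: how much memory to expose
        c = f * c + i * g           # additive update lets information persist
        h = o * np.tanh(c)
        hs.append(h)
    return np.stack(hs), c


rng = np.random.default_rng(0)
T, input_size, hidden_size = 10, 1, 8
series = np.sin(np.linspace(0, 3, T)).reshape(T, input_size)
W = rng.normal(scale=0.1, size=(4 * hidden_size, input_size))
U = rng.normal(scale=0.1, size=(4 * hidden_size, hidden_size))
b = np.zeros(4 * hidden_size)
hidden_states, final_cell = lstm_forward(series, W, U, b, hidden_size)
print(hidden_states.shape)  # → (10, 8)
```

For forecasting, the final hidden state would typically feed a small output layer that predicts the next value, and the whole network would be trained on windows of the series.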

Prophet: This is an open-source forecasting library developed by Facebook (now Meta), designed for time series with strong seasonal and trend components.
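To make "seasonal and trend components" concrete: Prophet fits a decomposable model in which the series is the sum of a trend term g(t), a seasonality term s(t), a holiday-effects term h(t), and an error term. This is the default additive mode; the library also offers multiplicative seasonality. A seasonal component with period P (for example, P = 365.25 days for yearly seasonality) is represented by a truncated Fourier series with N terms whose coefficients are fitted from the data:

```latex
y(t) = g(t) + s(t) + h(t) + \varepsilon_t

s(t) = \sum_{n=1}^{N} \left( a_n \cos\frac{2\pi n t}{P} + b_n \sin\frac{2\pi n t}{P} \right)
```

Because each component is a separate term, the fitted trend and seasonal patterns can be plotted and inspected on their own.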

Dynamic Time Warping (DTW): This algorithm measures the similarity between two time series that may vary in speed or length, by finding the alignment between them that minimizes the total distance.
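A point-by-point comparison only works when two series have the same length and are perfectly in step. DTW instead lets one point match several points in the other series and uses dynamic programming to find the cheapest alignment. A minimal sketch of the classic O(n·m) algorithm, assuming absolute difference as the local cost (the function name `dtw_distance` is hypothetical):

```python
def dtw_distance(a, b):
    """Dynamic time warping distance between two sequences (absolute-difference cost).

    Runs in O(len(a) * len(b)) time and memory.
    """
    n, m = len(a), len(b)
    inf = float("inf")
    # cost[i][j] = minimal cumulative cost of aligning a[:i] with b[:j]
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = abs(a[i - 1] - b[j - 1])
            cost[i][j] = d + min(
                cost[i - 1][j],      # b[j-1] is also matched to a[i-2]
                cost[i][j - 1],      # a[i-1] is also matched to b[j-2]
                cost[i - 1][j - 1],  # both sequences advance one step
            )
    return cost[n][m]


print(dtw_distance([1, 2, 3], [1, 2, 2, 3]))  # → 0.0
print(dtw_distance([0, 0], [1, 1]))           # → 2.0
```

The first pair has the same shape but one series lingers on the value 2, so DTW aligns them at zero cost even though their lengths differ.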

Conclusion

Time series data is a critical component of modern machine learning techniques, and it has become increasingly important in many areas of research and business. In this blog, we explored what time series data is, how it is used in machine learning, and some common algorithms used in time series analysis. Time series analysis is a powerful tool for making predictions about future events based on historical data, and it can be used in a wide range of applications.

Thank you for reading this :)